Hacking Hacker News frontpage with GPT-3

In three weeks, I got to the front page five times, received 1054 upvotes, and had 37k people come to my site.

Posting on Hacker News is like a box of chocolates – you never know what you’re gonna get. I’ve been submitting since 2017 and never had more than one point. So I stopped trying.

A month ago, I got access to the OpenAI API. After ten minutes of tinkering, I got an idea: what if I could make it write good Hacker News titles for my blog posts? I quickly looked up the most favorited posts, designed the prompt in plain English, and generated my first title. It looked weird. For a few minutes, I even doubted whether it was worth sharing. But I was curious – so I closed my eyes and hit submit.

The post went to the moon with 229 points and 126 comments in one day. Fascinated, I continued generating titles (and what would you do?). In three weeks, I got to the front page five times, received 1054 upvotes, and had 37k people come to my site.

Below is everything I’ve learned building a Hacker News post titles generator with the OpenAI API, designing GPT-3 prompts, and figuring out how to apply GPT-3 to problems ranging from sales emails to SEO-optimized blog posts. At the end of the post, I cover the broader implications of GPT-3 that became obvious only after a month of working with the tool. If you’re an entrepreneur or an investor who wants to understand the change this tech will drive, you can read my speculations there.

If you have no idea what I’m talking about, read more about GPT-3 in Gwern’s post first, or check OpenAI’s website with demo videos. In this post, I assume you’re already familiar with the API on some basic level.

Feb 16 update: After I published the post, 48 people asked how to apply GPT-3 to their problems. To help them get started with the OpenAI API, I built the first GPT-3 course that covers everything I learned – from use cases to prompt design. If you’re interested, email me here.

How I built GPT-3 HN titles generator

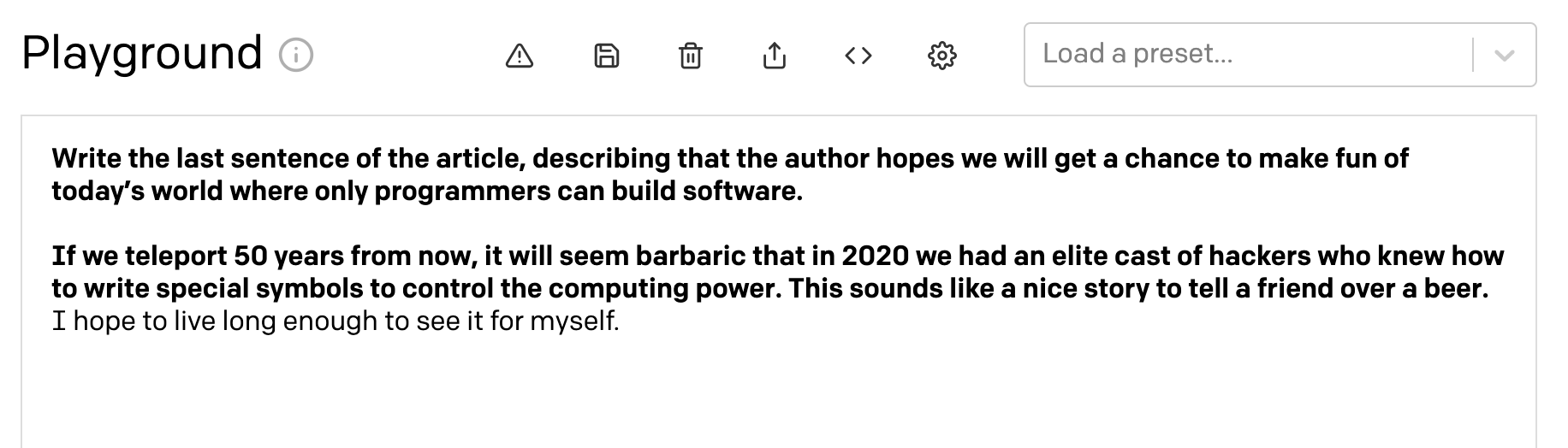

After I got an idea for an HN titles app, I needed to understand how to do it with GPT-3. As there were no tutorials on approaching the problem, I went to the playground and began experimenting.

Generating new titles

1. Finding the data

First, I wanted to see if I could make GPT-3 generate engaging post titles at all. To do that, I needed two things:

- Write a prompt that concisely describes the problem that I want to solve.

- Feed the API some sample data to stimulate the completion.

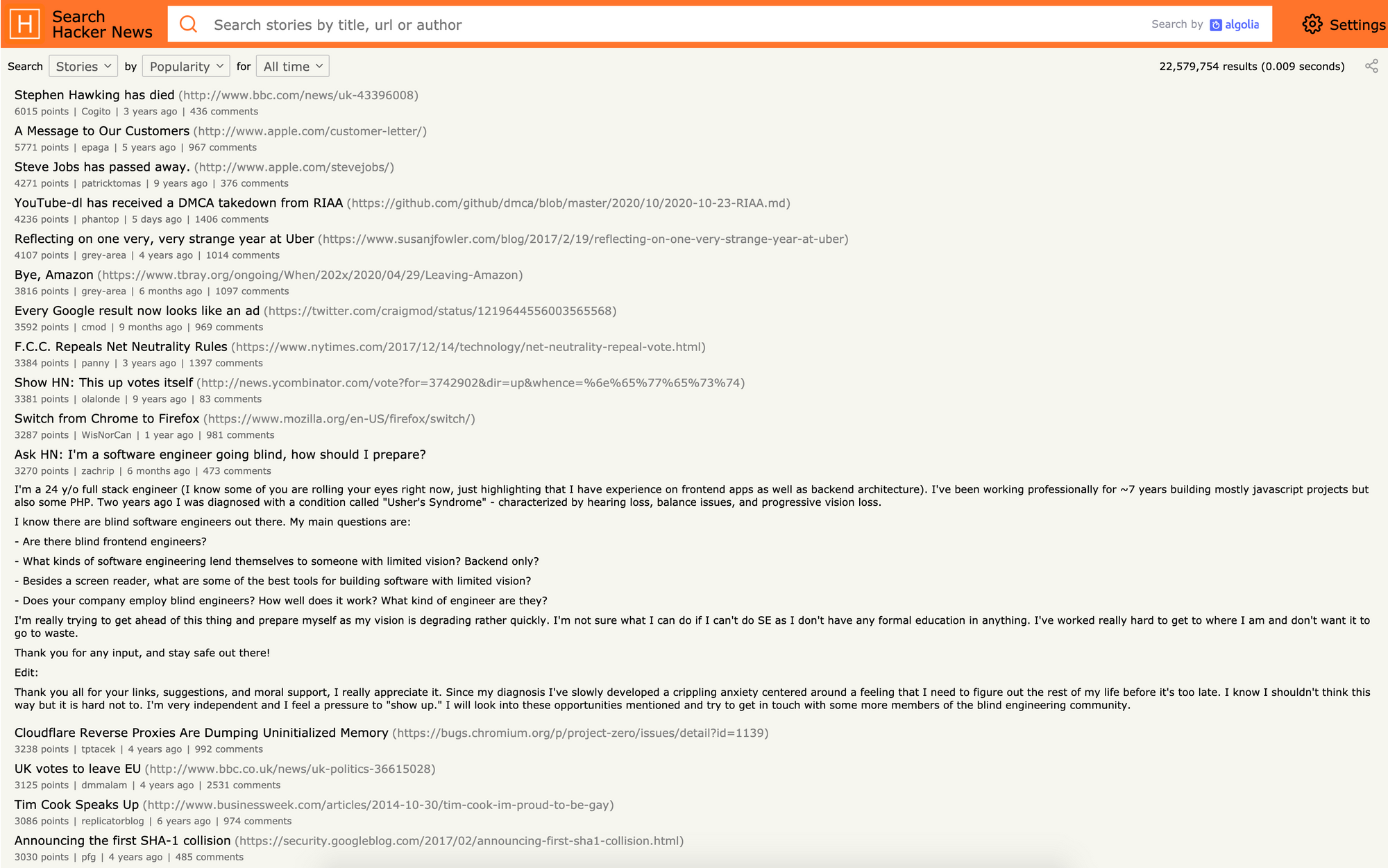

I went ahead and searched for the most upvoted HN posts of all time. In a few minutes, I had an Algolia page with a list of links. But after skimming through them, I figured out that upvotes wouldn’t work. The most upvoted posts are mostly news, and they poorly reflect what kind of content the community values.

Disappointed, I discarded upvotes as a metric. I needed something that would describe the value people get from the post. Something like... bookmarks?

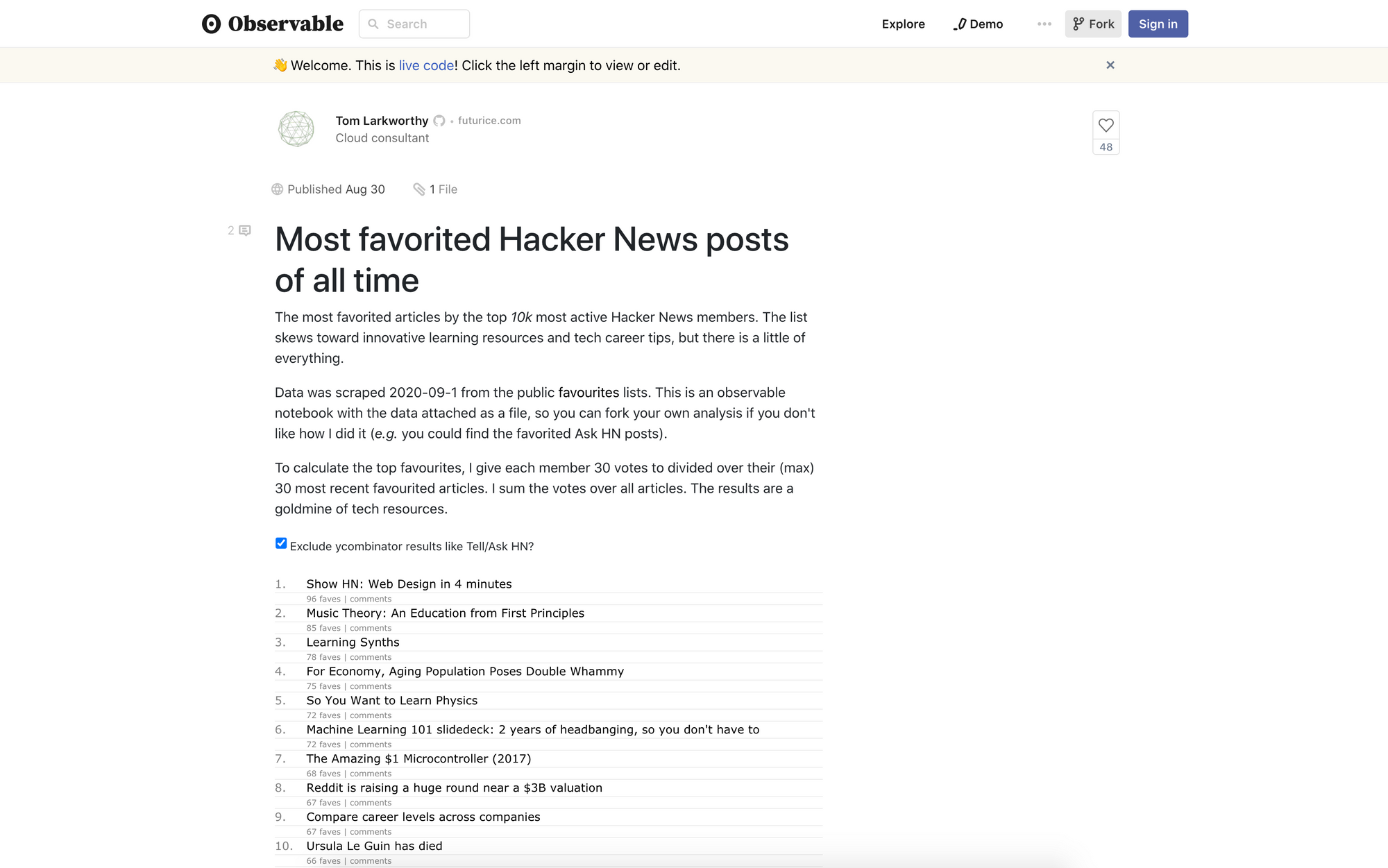

I quickly looked up the most favorited HN posts. The idea was simple: people don’t bookmark news. They favorite things they want to explore later.

The next step was to grab the data from the list, insert it into the playground, and write a clear and concise prompt.

I actually grabbed the data from dang’s comment rather than the original post; his list was a global one.

2. Designing a prompt

The best way to program the API is to write a direct and straightforward task description, as you would do if you were delegating this problem to a human assistant.

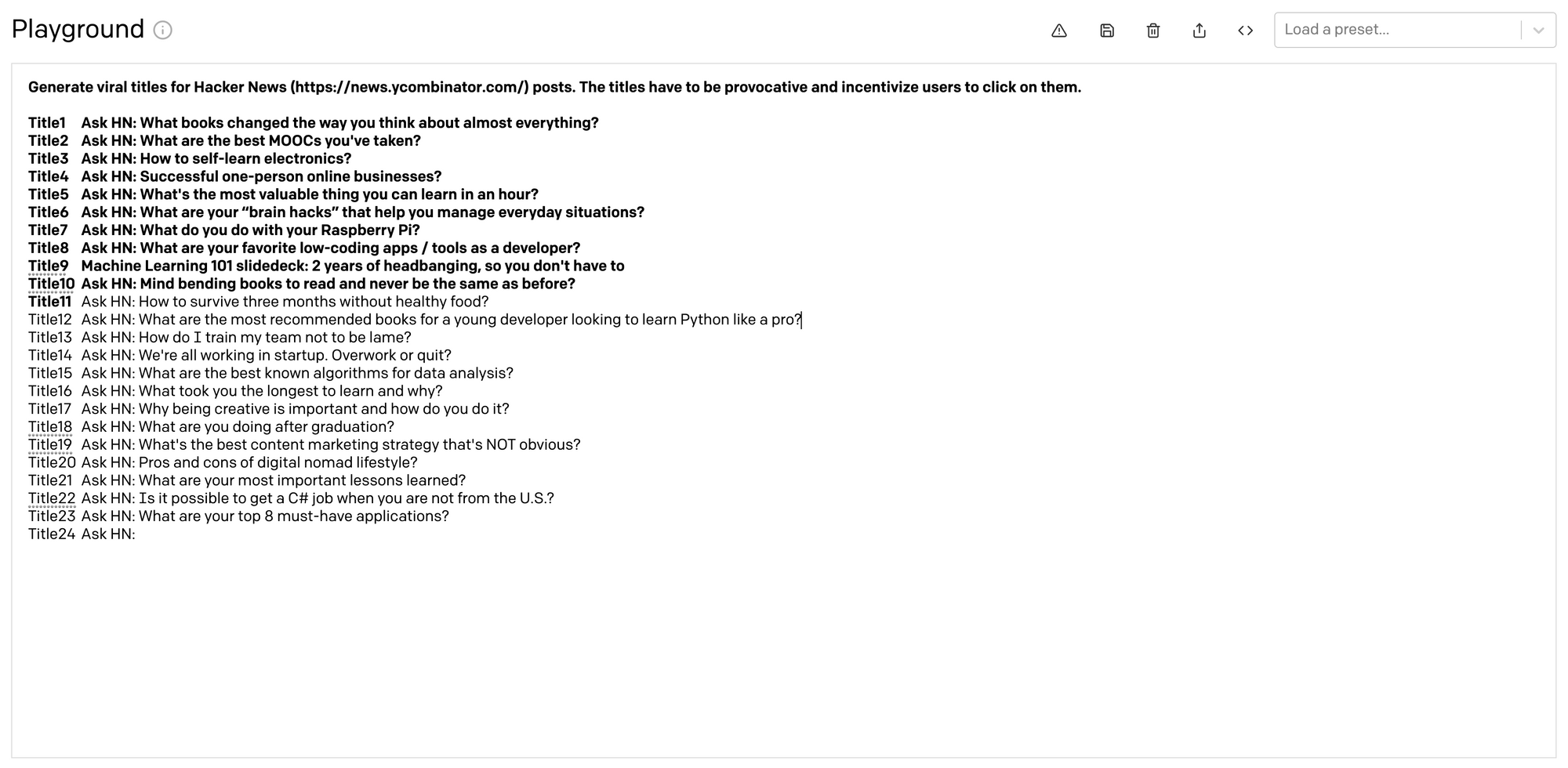

Here’s what my first prompt looked like:

Generate viral titles for Hacker News (https://news.ycombinator.com/) posts. The titles have to be provocative and incentivize users to click on them.

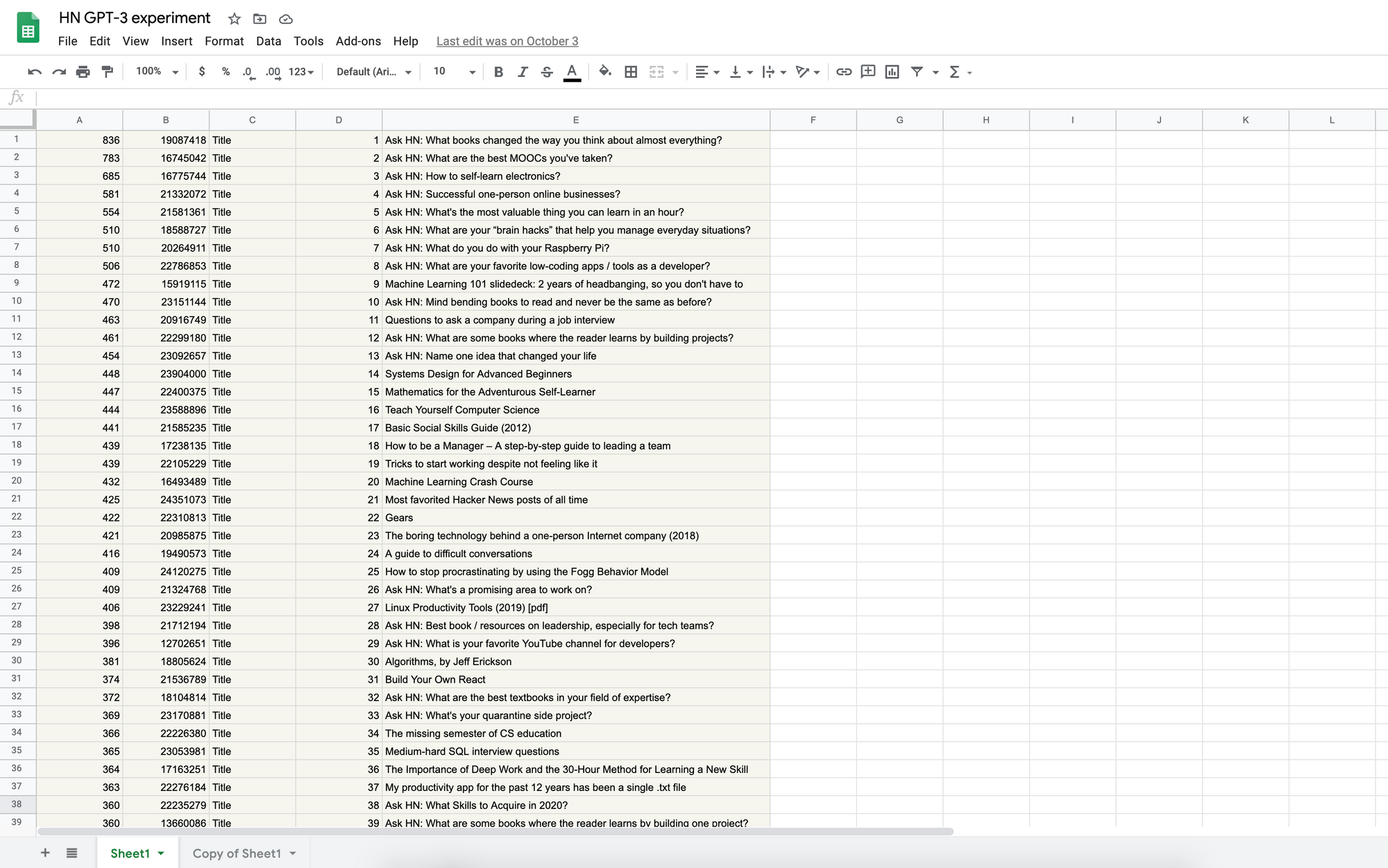

Data-wise, I needed to clean up the list slightly, get rid of irrelevant stuff like IDs, and choose up to five titles to use as a sample – the OpenAI team suggests that selecting three to five titles works best for text generation. If you feed the API more examples, it picks up wrong intents and generates irrelevant completions.

In a few minutes of Google Sheets work, the cleanup was done, and I had a data set of the most favorited HN post titles of all time. I put together my first prompt and clicked “Generate.”
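The same assembly step can be sketched in code instead of pasting into the playground. This is only an illustration: the `build_titles_prompt` helper and the `Title:` label are my assumptions, and the sample titles are placeholders rather than the real most-favorited list.

```python
def build_titles_prompt(task: str, samples: list[str], label: str = "Title") -> str:
    """Join a task description and a few sample titles into one prompt.

    The prompt ends with an open "Title:" line so the model continues the list.
    """
    if not 3 <= len(samples) <= 5:
        raise ValueError("use three to five sample titles")
    lines = [task.strip(), ""]
    lines += [f"{label}: {s.strip()}" for s in samples]
    lines.append(f"{label}:")  # left blank for the completion
    return "\n".join(lines)


task = (
    "Generate viral titles for Hacker News (https://news.ycombinator.com/) posts. "
    "The titles have to be provocative and incentivize users to click on them."
)
# Placeholder samples, not the real data set.
samples = ["Sample title one", "Sample title two", "Sample title three"]
prompt = build_titles_prompt(task, samples)
print(prompt)
```

The three-to-five check mirrors the sample-size advice above; it is a guard I added, not something the API enforces.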

3. Tinkering with completions

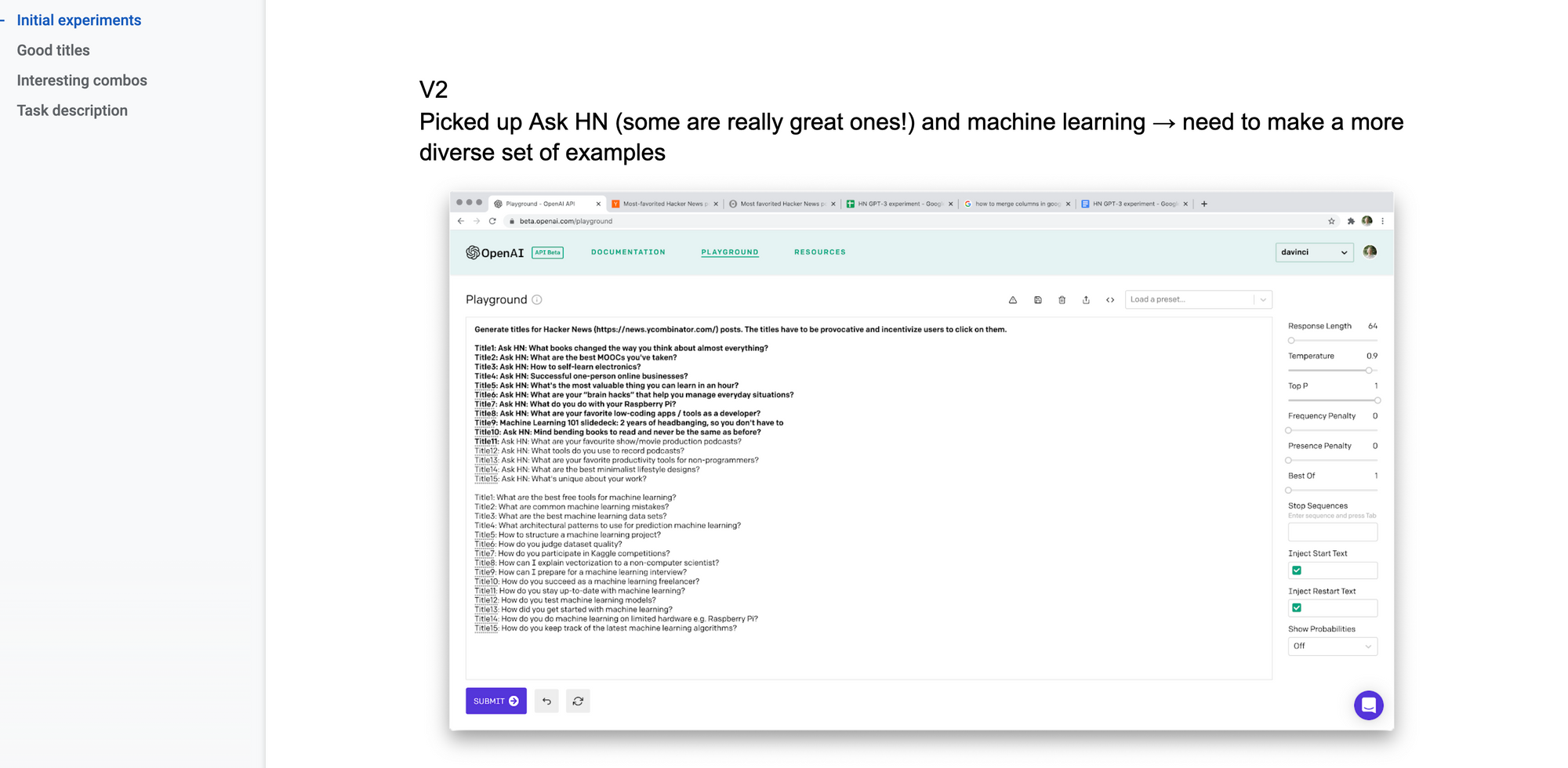

The first completion was unpromising. The list of titles had too many Ask HNs, and GPT-3 picked up questions as a pattern:

To fix that, I cut out half of the Ask HNs from the dataset and began tinkering.

If there was one thing I could tell someone about GPT-3, it’d be that getting a perfect completion on the first try is a dream. The best stuff comes after dozens of experiments, sometimes after generating completions with the same parameters many times with the Best Of parameter and writing another GPT-3 classifier to discover a good one. Moreover, you need to test the prompt’s quality, data samples, and temperature (“creativity” of responses) individually to understand what you need to improve.

If you’re looking for prompt design tips, head on to chapter 2 of the post.

Here’s a list of experiments I’ve done:

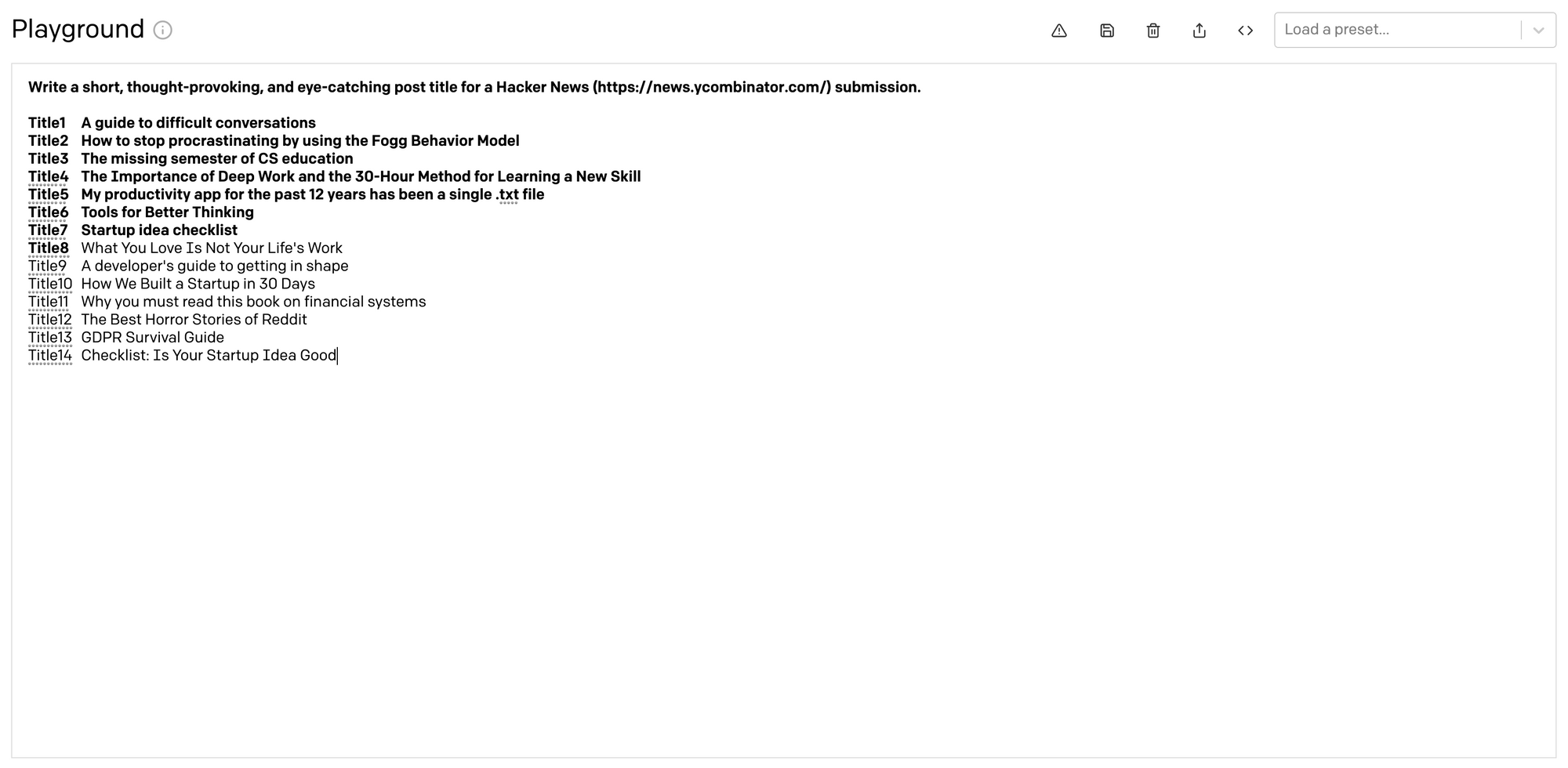

- Edited the prompt many times, including and excluding adjectives from the task description. I tried “catchy,” “provocative,” “thought-provoking,” and many others. The best configuration I got was “Write a short, thought-provoking, and eye-catching post title for a Hacker News (https://news.ycombinator.com/) submission.”

- Split the prompt into two sentences, separating the task and its description. Discovered that one-sentence prompts work best for simple tasks.

- Played with data samples. I added and removed Ask HNs, randomly sampled from the list of most favorited posts, and tried picking more subjectively thoughtful titles.

- Changed the temperature. The best results came at .9, while anything less than .7 was repetitive and very similar to the samples. Titles generated with temperature 1 were too random and didn’t look like good HN titles at all.

To judge the quality of completions, I’ve come up with a question: “If I saw this on HN, would I click on it?” This helped me move quickly through experiments because I knew what bad results looked like.

After half an hour of tinkering, I got the following completion:

That’s when I realized that I was onto something. From the list of generated titles above, I’d click on at least three links just out of curiosity. Especially on “A developer’s guide to getting in shape.”

What’s even more interesting, the completion above was a result of tuning the sample data by hand. Title8, What You Love Is Not Your Life’s Work, was originally a part of another completion that was lame. So I cut out the bad stuff, added Title8 to my data sample, and continued generating from there.

The next step was to see if I could make GPT-3 create a custom title for my blog post.

Generating custom titles

1. Changing the method

To make GPT-3 generate custom titles, I needed to change my approach. I was no longer exploring new, potentially interesting headlines but figuring out how to make a good one for a post that was already written. To do that, I couldn’t just tell the API, “hey, generate me a good one.” I needed to show what a good one actually is and give GPT-3 some idea of what the post is about.

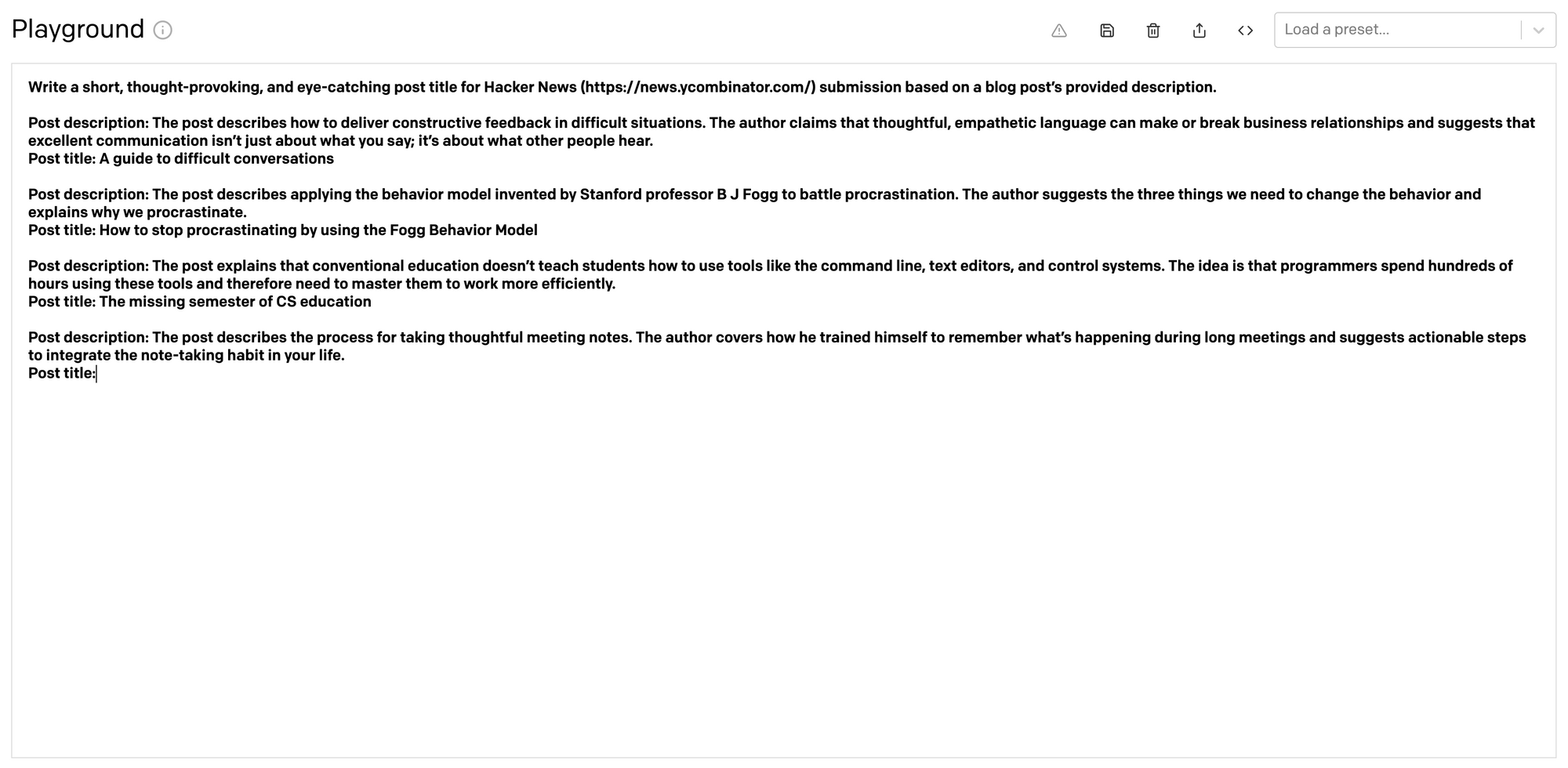

The first thing I changed was the prompt. This was relatively easy because I applied the same model of thinking again – “What would I tell a human assistant if I had to delegate this problem?”

Here’s a prompt that I used:

Write a short, thought-provoking, and eye-catching post title for Hacker News (https://news.ycombinator.com/) submission based on a blog post’s provided description.

One unexpected benefit from tinkering with the prompt is the clarity of thought. When you’re dealing with GPT-3, there’s no way to make it work if you don’t know what you’re doing. You need to express your thought in clear terms. It’s a brilliant natural constraint and one of the most underrated benefits of GPT-3: when you must state clearly what you want to do, you begin to better understand the problem.

2. Updating the data

After I designed a new prompt, I had to update my data set. I went back to my previous experiment, selected five titles from the list of most favorited posts of all time, and wrote a clear and concise description for each of them. Lastly, I wrote a description of my own work that I wanted to generate the title for. I left the title field blank and hit “Generate.”
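The layout described here (a task line, a few description-and-title pairs, and a final description with its title left blank) can be sketched as follows. The `Description:` and `Title:` labels and the `build_custom_title_prompt` helper are hypothetical; the example pairs are placeholders.

```python
def build_custom_title_prompt(task, examples, new_description):
    """Build a prompt from (description, title) pairs plus one open slot.

    The last block has a description but an empty title, which is the part
    the model is expected to fill in.
    """
    blocks = [task.strip()]
    for description, title in examples:
        blocks.append(f"Description: {description.strip()}\nTitle: {title.strip()}")
    blocks.append(f"Description: {new_description.strip()}\nTitle:")
    return "\n\n".join(blocks)


task = (
    "Write a short, thought-provoking, and eye-catching post title for "
    "Hacker News (https://news.ycombinator.com/) submission based on a "
    "blog post's provided description."
)
# Placeholder pairs, not the real favorited posts.
examples = [
    ("A description of the first sample post.", "First sample title"),
    ("A description of the second sample post.", "Second sample title"),
    ("A description of the third sample post.", "Third sample title"),
]
prompt = build_custom_title_prompt(task, examples, "A description of my own post.")
print(prompt)
```

Because every block has the same shape, a stop sequence on the newline after the title would likely keep each completion to a single title, though I have not verified that this is how the original setup was configured.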

3. Experimenting with titles

This time, the completions were shockingly good. Because I’d already learned how to design a prompt and get the data right, I didn’t have to spend much time tuning the parameters. The only thing I changed was the temperature – the best results came at .92.

Here’s a list of titles I generated for my post on studying kung fu in China:

- How a Shaolin monk trains Western students

- I went to a Kung Fu school for a month, and I learned 3 lessons that I will never forget

- How I got a Shaolin monk to teach me Kung Fu in China

- What it’s like to study Kung Fu from a Shaolin monk

- 21 days in the Shaolin Temple

- An edited transcript of my conversation with my Kung Fu master

- How I learned Kung Fu from a monk in China: The full story

- I Spent a Month with a Kung Fu Master in China

- The Shaolin warrior in the cubicle

- Kamikaze training – A brief study in Kung Fu

- Why I traveled to China to study Kung Fu

- I went to a Shaolin Monastery for 6 months to learn Kung Fu: a true story

- I went to the Shaolin Temple to study Kung Fu, and all I got was this lousy blog post

- The monk and the engineer

- What it’s like studying Kung Fu from Shaolin monks for 6 months

- I went to Shaolin and all I got were Kung Fu lessons

And, of course, my all-time favorite one:

When a Shaolin monk met a programmer from the Silicon Valley

But the best titles didn’t come from completions. The most interesting headlines were the ones I ended up thinking of after I saw the completions that GPT-3 generated. It felt as if we were working together – GPT-3 as a writer, and I as an editor. More on that later in the post.

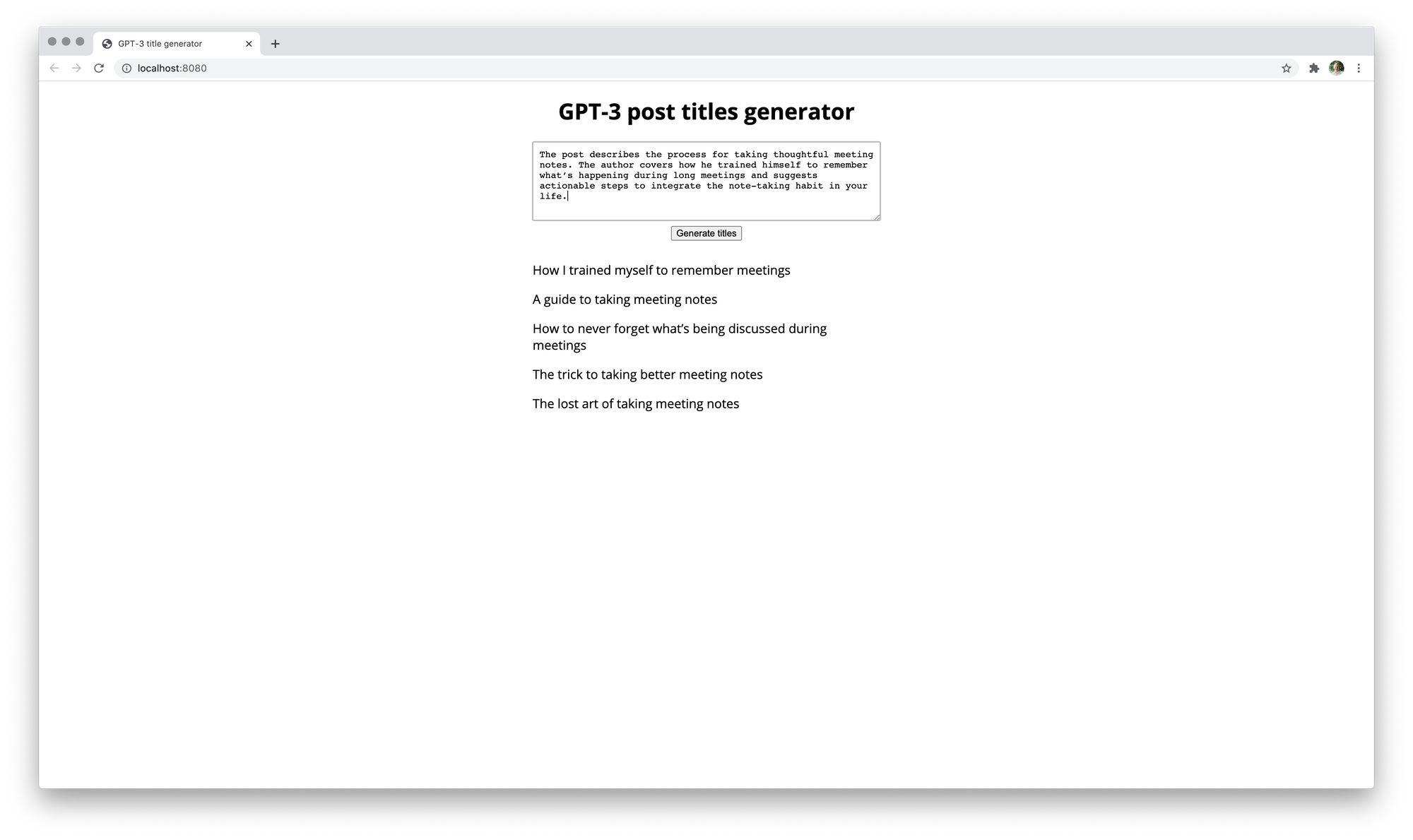

To see more completions in one go, I’ve built a simple React app that sends batch queries to the OpenAI API and displays the titles that come back. Here’s how it looks:
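The batching idea itself is small. Below is a Python sketch of it, not the React app: `complete` is a hypothetical callable standing in for whatever sends one request to the API, and a canned fake is used here so the example runs offline.

```python
from typing import Callable, List


def collect_titles(complete: Callable[[str, float], str], prompt: str,
                   temperature: float = 0.9, attempts: int = 10) -> List[str]:
    """Call `complete` several times and return unique, non-empty first lines."""
    seen = set()
    titles = []
    for _ in range(attempts):
        stripped = complete(prompt, temperature).strip()
        first_line = stripped.splitlines()[0].strip() if stripped else ""
        key = first_line.lower()  # treat case-only variants as duplicates
        if first_line and key not in seen:
            seen.add(key)
            titles.append(first_line)
    return titles


# Stand-in for a real API call so the sketch runs without a network.
canned = iter([
    "The lost art of taking meeting notes\n",
    " the lost art of taking meeting notes",
    "How to take detailed meeting notes",
    "",
])


def fake_complete(prompt: str, temperature: float) -> str:
    return next(canned)


titles = collect_titles(fake_complete, "Title:", attempts=4)
print(titles)
```

Deduplicating before display matters more at lower temperatures, where repeated completions are common.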

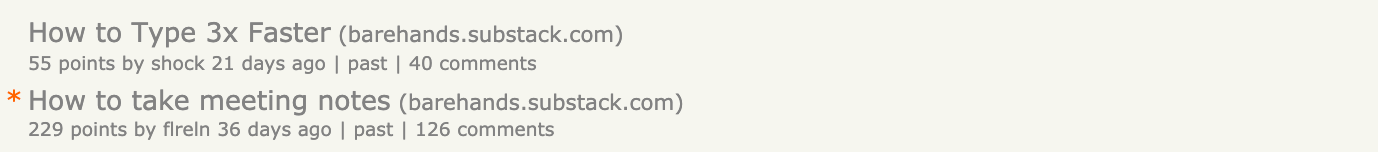

After twenty minutes of tinkering, I had a list of the top three titles for my work on meeting notes:

- How I trained myself to remember 95% of a 2h meeting

- The lost art of taking meeting notes

- How to take detailed meeting notes

The list looked good. I went to Hacker News, picked the title I liked the most and hit submit.

Results

My first generated title, “How I trained myself to remember 95% of a 2h meeting,” stayed on the front page for 11h, received 229 upvotes, 129 comments, and brought me 10K website visits and 353 email subs.

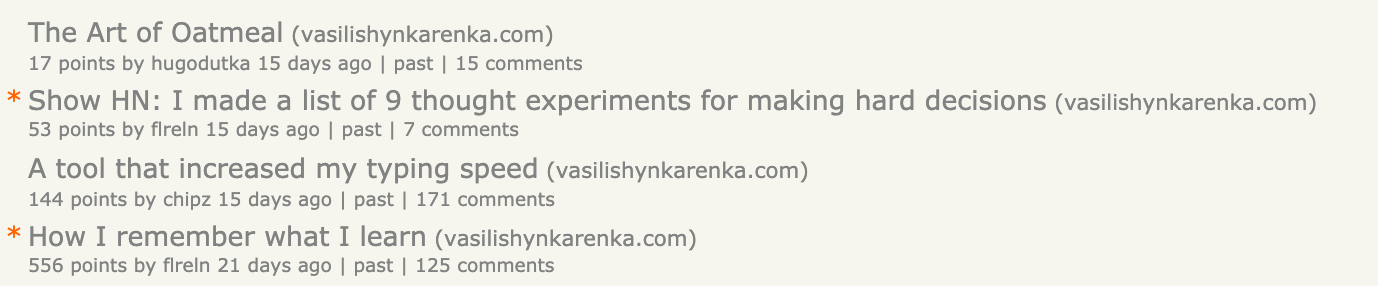

Two weeks later, I did the same thing with my work on how to learn. This one went beyond everything I could have ever imagined: HN front page for more than a day, 556 upvotes, 125 comments, HN newsletter and 30K website visits. Over a hundred people emailed me asking for tips on learning how to code.

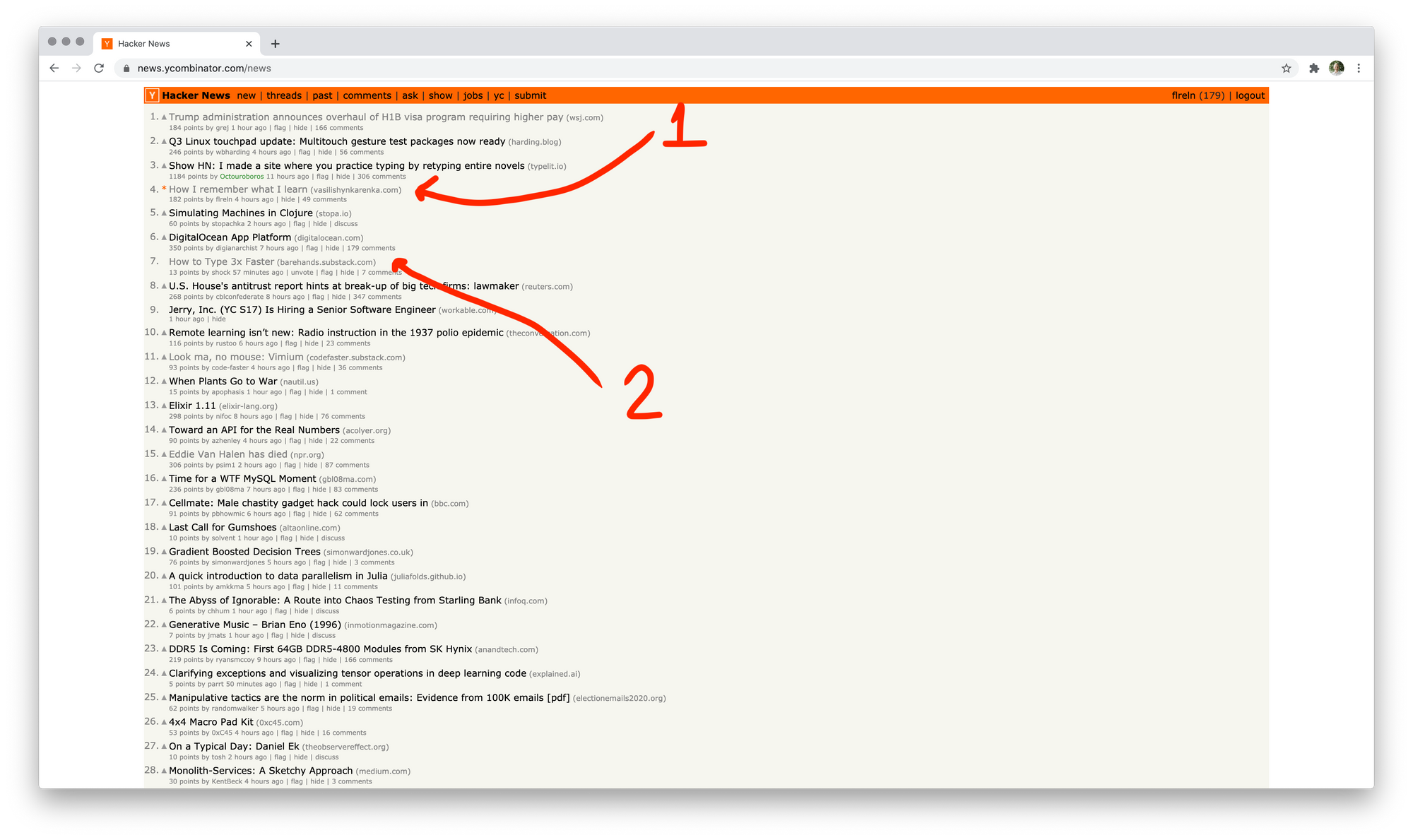

What’s even more surprising, people who came to my site discovered my old works and submitted them to HN for me. At one point, I was on the front page with two posts at the same time, at #4 and #7. The generator was working.

The basics of GPT-3 prompt design

Three parameters contribute to generating good completions: prompt design, sample data, and temperature. All three significantly impact the output of the model and need to be carefully designed and tested.

If you’re having trouble generating completions, I highly recommend writing down combinations and trying them individually. It’s easy to fall into the trap of thinking that the API is terrible when you’re just doing it wrong.

1. Design a prompt

The hardest part of programming GPT-3 is to design a prompt. To get it right, you need a solid understanding of the problem, good grammar skills, and many iterations.

1.a. Understand the problem

To design an excellent prompt, you need to understand what you want the API to do. Programming GPT-3 is very much like writing code but with more room for error because the natural language rules are way more flexible. As in coding, the most common mistake is blindly bashing the keyboard without knowing what you want the program to do.

A good mental model here is to think of the API as a human assistant. To come up with a prompt, ask yourself: “How would I describe the problem to an assistant who hasn’t done this task before?”

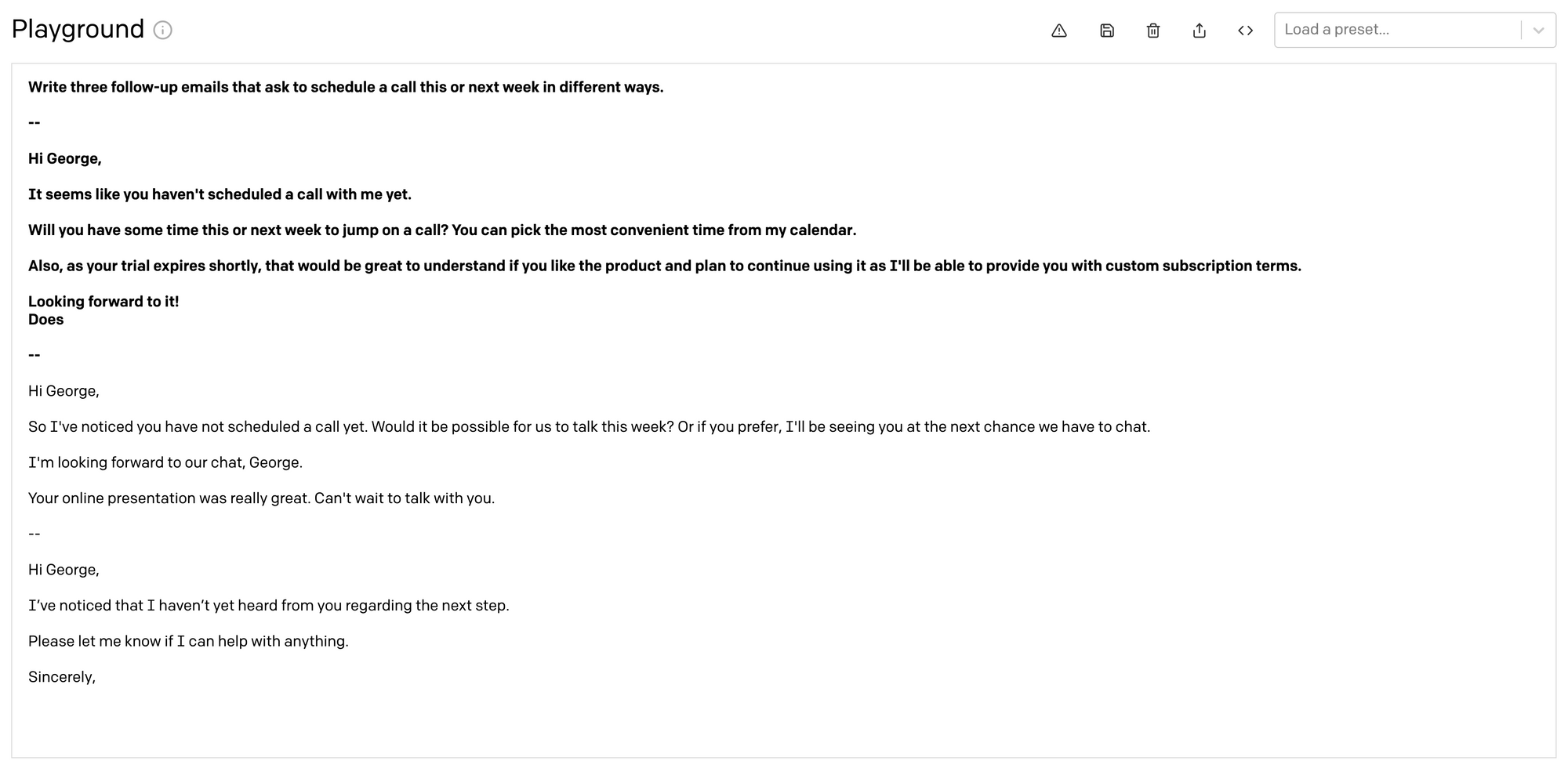

For example, say you want to generate a sales follow-up email based on a text prompt. To do that, you need to a) write a clear and concise description of the task at the very top; and b) supply the API with sample emails.

To design great prompts, I’ve come up with a simple trick – I draft an email to my friend. I describe what I want the API to do in the email body and provide a few examples of the successful outcome. I do not send this email, but the process of writing restructures my thought: I manage to objectively state the problem instead of writing a vague shortcut as I’d do for myself.

Hypothesis: I assume the email trick works because I’ve already thought in this environment for some time, and my mind picks up the familiar UI.

1.b. Check your grammar

Grammar is the programming language for GPT-3. If you get your grammar wrong or your passage has subtle meanings, you will not get good completions.

Here are a few tips to get your grammar right:

- First, write in simple and clear terms. Do not use complex sentences with multiple clauses. Avoid subtle meanings.

- Make sure your sentences are short. For example, if you want to program the API to generate an SEO-optimized article that includes some keywords, do not write: “Write a high-quality, SEO-optimized article that describes how to use psychology in business and includes two keywords, “psychology” and “business.”” Use the following prompt instead: “Write a high-quality article that describes how to use psychology in business. The article should be SEO-optimized for two keywords: “psychology” and “business.””

- More generally, put specifics to the very end of the prompt. If you’re generating completions based on some keywords, add these units in quotes to the end. This design pattern generates better completions than inserting keywords in the middle of the sentence.

- Play with adjectives to come up with different conversation styles. For example, if you’re making a customer support app and want the bot to be polite, you can specify that in the prompt by mentioning “polite” as a reply quality. Changing the style works well in combination with temperature: you’re more likely to get “friendly” completions if your temperature is high (.8-1) and “official” ones if you go for low temp (0-.5).
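The "short sentences, specifics last" pattern from the tips above can be sketched as a small template. The `seo_article_prompt` helper is hypothetical and the wording of the second sentence is my paraphrase of the example prompt, not a tested formula.

```python
def seo_article_prompt(topic: str, keywords: list[str]) -> str:
    """Build a two-sentence prompt: the task first, quoted keywords last."""
    if not keywords:
        raise ValueError("at least one keyword is required")
    quoted = ", ".join(f'"{k}"' for k in keywords)
    return (
        f"Write a high-quality article that describes {topic}. "
        f"The article should be SEO-optimized for these keywords: {quoted}."
    )


prompt = seo_article_prompt(
    "how to use psychology in business", ["psychology", "business"]
)
print(prompt)
```

Keeping the keywords in their own trailing sentence means the task sentence stays the same across runs, so only the specifics change between experiments.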

As I’m not a native speaker, grammar was a challenge for me. I solved it by using Grammarly, which is an online grammar checker. When designing a prompt, I first draft ideas on paper and then go to Grammarly to make sure I got the grammar right.

If you’re serious about leveling up your grammar and writing, here are the three best materials I’ve ever read:

- The Art of Nonfiction, a book by Ayn Rand. The most underrated writing book from the author of Atlas Shrugged and The Fountainhead. Ayn outlines the best writing process I’ve ever seen, interleaving psychology, biology, and language. I use a variation of her approach in my work every day.

- Simple & Direct, a book by Jacques Barzun. A go-to book for writing clearly. Jacques explains how to think about writing and what mental models you need to be concise.

- The Elements of Style, a book by William Strunk Jr. A style guide, focused on words and how to use them.

1.c. Test and iterate

No matter how thoughtful your original prompt is, you are unlikely to get excellent completions on the first try. I recommend testing different combinations of all three components (the prompt, sample data, and temperature) to get great completions. To do that, you need to keep a temporary work file where you write down combinations you test and add notes on their performance.

The simplest solution I’ve found here is creating a new Google Doc for every problem that I’m solving with GPT-3 and documenting everything in there. I use Google Docs because it’s easy to create and share in the browser (hint: cmd+t, then docs.new), supports images, and tracks change history. Feel free to use whatever tool works for you – the point is to document your experiments to understand which combinations of prompt, data, and temperature work best.

Two other thoughts on testing:

- When testing a prompt, make sure you get the grammar right first. That’s the most common mistake I’ve found myself committing when solving a problem with GPT-3. I screwed up the prompt and didn’t clearly specify what I wanted the API to do. To fix wrong completions, rewrite the prompt and generate 5-10 completions with a new one.

- Make sure you’re not changing all parameters in one go. If you are, it will be hard to understand whether it’s the prompt, data, or temperature that affects the completion – test one thing at a time and document what you learned.
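If you prefer keeping the experiment log in code rather than a document, one-thing-at-a-time testing can be sketched like this. The `Experiment` record and `vary_one` helper are illustrative names of my own, and the baseline values are placeholders.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Experiment:
    prompt: str
    samples: tuple
    temperature: float
    notes: str = ""  # fill in after reading the completions


def vary_one(baseline, field_name, values):
    """Return copies of `baseline` that differ only in `field_name`."""
    return [replace(baseline, **{field_name: v}) for v in values]


baseline = Experiment(
    prompt="Write a short, eye-catching post title.",
    samples=("Sample title one", "Sample title two", "Sample title three"),
    temperature=0.7,
)
# Only the temperature changes; prompt and samples stay fixed.
runs = vary_one(baseline, "temperature", [0.7, 0.8, 0.9, 1.0])
for run in runs:
    print(run.temperature, run.prompt)
```

Freezing the dataclass makes it harder to mutate a baseline by accident, so every run in the log stays comparable to the one it was derived from.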

2. Get the sample data right

An excellent task description doesn’t yield good completions if you screw up the data. The samples you use depend on the problem you’re solving. That’s why it’s hard to give any general advice about getting the data right – you can solve the same problem in many ways.

For example, say you want the API to write a LinkedIn profile description. There are at least three ways to do that:

- Feed the API a set of key-value pairs of a person’s qualities as keywords and their profile description as a text paragraph. Then write the qualities as a key, and generate a profile description as a completion.

- Write a prompt that tells the API to generate a profile description, supply it with the first few sentences, and let the API do the rest.

- Make a few key-value pairs where the key is a one-sentence description of a person you like, and the value is their “about me” section from LinkedIn. To generate a new “about me,” simply write a one-sentence description and specify the task at the top of the prompt.

In the first case, you need to provide the API with the samples close to what you want to get as a result. In the second scenario, you need to just begin the sequence and tell the API to generate the rest. In the third prompt, you need more custom “about me” samples. But in all three cases, the data impacts the tone and voice of the completion, just like the instruction does.

To design a great sample, make sure it’s coherent with the prompt and the temperature setting that you use. For example, if you want to make an app that generates friendly emails from bullet points, both the prompt and samples should indicate that, but in different ways: the prompt must include the relevant adjectives such as “friendly,” “sociable,” “casual,” and the samples must be semantically close to the instruction to show the API what you want.

3. Control the temperature

Temperature is a parameter that controls how “creative” the responses are.

If you want to do some text generation, like writing a story, designing a flag, or drafting an email reply, the temperature must be high. In my Hacker News titles generator app, I’ve found the temperature of .9-.95 to perform best. However, at the temperature of 1, the responses became “too crazy” and often irrelevant. At the temperature of .7-.8, most generated titles were repetitive and boring.

The playground’s initial temperature setting is .7, and many people think the API is not working for them just because they don’t change the default. Make sure you avoid that mistake.

To get the temperature right, test different variations of prompt-data-temp settings. As a rule of thumb, .9 works fine for any creative problem, .4 is sufficient for most FAQ apps, and 0 is for strict factual responses.
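As a starting point for experiments, the rule of thumb can be written down as a small lookup. The task names and the `starting_temperature` helper are my own labels; the numbers are the ones from the paragraph above and are meant to be tuned, not trusted.

```python
# Starting points only; the best value still depends on the prompt and samples.
STARTING_TEMPERATURE = {
    "creative": 0.9,  # stories, titles, email drafts
    "faq": 0.4,       # most FAQ-style apps
    "factual": 0.0,   # strict factual responses
}


def starting_temperature(task_kind: str) -> float:
    """Look up a first temperature to try for a kind of task."""
    try:
        return STARTING_TEMPERATURE[task_kind]
    except KeyError:
        raise ValueError(f"unknown task kind: {task_kind!r}") from None


print(starting_temperature("creative"))
```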

What I learned after a month of building GPT-3 apps

The learnings can be split into two broad categories: insights about the API itself and broader implications of GPT-3 tech that became obvious only after a month of work. I suggest reading the API insights first to get some context and then jumping to the broader, systemic implications of the tech.

API insights

1. Real value comes from suggestions, not completions

My HN titles app’s original idea was to train GPT-3 to understand what a good title is, generate many options, and submit the best one. But when I started playing around with titles, I discovered that the best ones came from slightly changing the completion. When I saw the completion, it sparked my imagination, and I got a better idea that I wouldn’t have discovered otherwise. In other words, the real value of the GPT-3 titles generator came from suggestions, not completions.

Nat Friedman, the CEO of GitHub, shares the same idea about a GPT-3 programming buddy here (1:25:30):

This means that most people who say that GPT-3 will fully automate creative work are likely wrong. The API will augment creative work and help workers to spot the seed of a great idea quickly. But I believe that for a long time it will be a human who steers the wheel, just like humans are training with computers in chess instead of dropping the game altogether because Deep Blue beat Kasparov.

Six years ago, Peter Thiel wrote in his book Zero to One:

“We have let ourselves become enchanted by big data only because we exoticize technology. We’re impressed with small feats accomplished by computers alone, but we ignore big achievements from complementarity because the human contribution makes them less uncanny. Watson, Deep Blue, and ever-better machine learning algorithms are cool. But the most valuable companies in the future won’t ask what problems can be solved with computers alone. Instead, they’ll ask: how can computers help humans solve hard problems?”

And after tinkering with GPT-3 for six weeks, I believe that GPT-3 tech will help humans solve challenging creative problems by being a second brain that remembers much more than a person does. The real value of GPT-3 for creative work will come from a symbiosis of man and machine, not from the complete automation of creative workers. For writing, this means that writers will produce their masterpieces together with the API. The winners will be the fastest ones to adapt.

2. GPT-3 generates not only solutions but problems

When I first tried generating code, something weird happened. I wanted to create some HTML to see what GPT-3 is capable of. I provided it with a few key-value pairs where the key was a query in a natural language (e.g., “generate an HTML page with an image in the center and two buttons below it”). The value was a piece of HTML code that renders exactly that layout.

Next, I entered a key without a corresponding value and hit “Generate.” As expected, the API completed the key with HTML code that did exactly what the query in plain English was asking. Curious, I hit the “Generate” button again.

But this time, I accidentally increased the number of tokens the API was supposed to generate. And GPT-3 began generating not only a value that completes my key but totally new key-value pairs as well.

In the list below, the second and third queries were generated by the OpenAI API:

- Write an HTML page for a personal website with an image, a header, a paragraph, and one button – contact me

- Write an HTML page with a big image on top, then an h2 header, a paragraph with a class of description and two big buttons – contact me and subscribe to my newsletter

- Write an HTML page for a portfolio with four images and one paragraph – contact me

The same worked with JS code. When I fed the API with some simple JS functions, it continued generating new ones. I repeated the same with blog titles, sales emails, and profile descriptions – it worked exactly the same in every case.
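To reuse generated problems, the extra key-value pairs have to be pulled out of the raw completion. Here is a rough sketch that assumes the pairs were labeled `Query:` and `HTML:` in the prompt; those labels and the `split_pairs` helper are my assumptions, and the completion text is made up for illustration.

```python
def split_pairs(completion: str, key_label: str = "Query:", value_label: str = "HTML:"):
    """Split text shaped like 'Query: ... HTML: ...' into (query, html) tuples."""
    pairs = []
    for chunk in completion.split(key_label):
        if value_label not in chunk:
            continue  # leading text or a pair cut off by the token limit
        query, html = chunk.split(value_label, 1)
        if query.strip() and html.strip():
            pairs.append((query.strip(), html.strip()))
    return pairs


# Made-up completion: the end of the requested value, one new pair,
# and a second pair that was truncated.
completion = (
    " <img src='me.png'><button>Contact me</button>\n"
    "Query: Write an HTML page for a portfolio with four images\n"
    "HTML: <div class='grid'></div>\n"
    "Query: Write an HTML page with"
)
print(split_pairs(completion))
```

Dropping unfinished pairs keeps half-written templates out of the library, at the cost of wasting the last few tokens of each completion.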

And then it hit me. If I could make GPT-3 generate not only solutions, but problems as well, then it’s possible to create enormously large pre-built libraries of templates for pretty much everything.

If you’re making a landing page builder, you could have millions of templates, components, and buttons to choose from. And if you add a rating system where users could vote for the best stuff, you can sort them with no effort. If you’re writing blog posts, this means you could have hundreds of titles and descriptions at your disposal. You could grab the best ones, tweak them a bit, and produce tons of great content.

After tinkering with the API for weeks, I’ve begun to believe that problem generation is one of the most underrated ways to use the API. Think of Canva: the product wouldn’t have worked if they didn’t have all those templates. But they had to build them manually. Now, it’s possible to automate template generation for way more applications than we think.

3. Text API has way more applications than people think

When I first heard of GPT-3, I was skeptical. You can only generate text completions, which means that use cases are just... text? What I didn’t understand back then is how abstract language actually is. We have words for pretty much everything, even for things that don’t exist in physical reality, such as love, fate, or happiness. And this means that the possibilities of text generation extend way beyond writing emails.

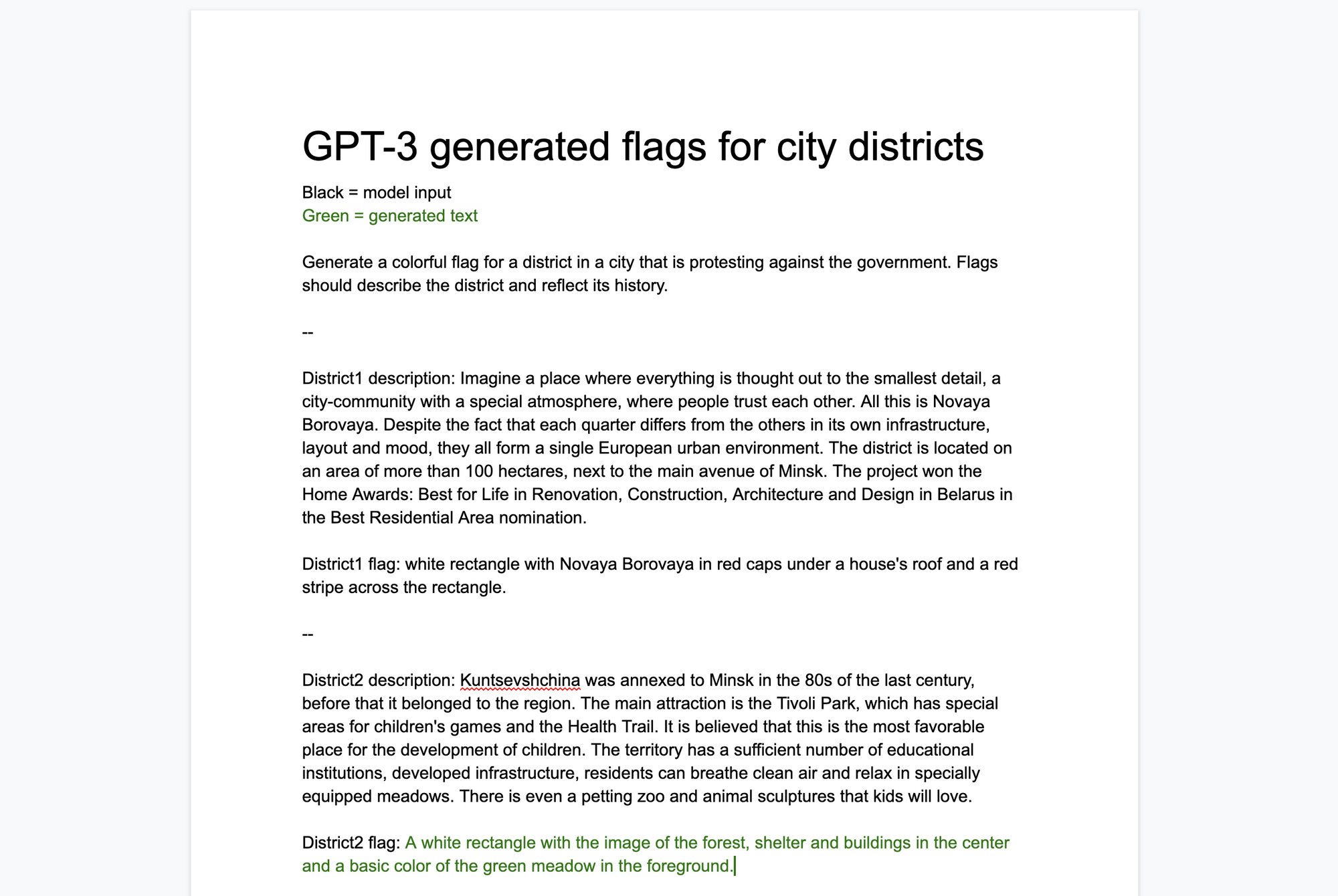

One day, a friend asked me to generate some designs for a neighborhood flag. I replied: “Do you know GPT-3 only works with text?” But he persisted and asked me to just think about it.

The designs were done in ten minutes. I looked up existing flags and made a few key-value pairs, where the key was a description of the district, and the value was a description of the district’s flag in words. And when I entered a new key-value pair for GPT-3 to complete, I left the flag description blank. It worked flawlessly – the ideas designed by GPT-3 were provocative but super interesting.

My friend was relieved. The reason it worked is that he didn’t need an exact flag design. He needed an idea that could spark one – and that’s precisely what the API did.

Broader implications of GPT-3

Before 1954, nobody had ever run a sub-four-minute mile. People thought it was just impossible for a human being to run that fast. And when something is considered impossible, we don’t even bother trying.

But on May 6, 1954, 25-year-old Roger Bannister broke the 4-minute mile. The conditions in Oxford, England were tough. But he finished in 3 minutes 59.4 seconds anyway, beating the impossible. Roger later said:

“There comes a moment when you have to accept the weather and have an all-out effort and I decided today was the day.”

Bannister’s record lasted just 46 days.

No, runners didn’t just become stronger. Nor were running shoes reinvented. Runners just knew it was possible, so they had a target to shoot at.

I believe OpenAI just broke the sub-four-minute mile record in NLP. In the next decade, we’ll see many more runners taking the same approach as the folks at OpenAI did. This will lead to an even faster evolution of the tech and some serious NVIDIA stock growth because everybody will be buying GPUs in enormous amounts.

I’ve been building conversational interfaces since 2015, and I haven’t seen anything of that quality in five years. I always had to be careful when typing a message to a bot because I was afraid it would break. I’m not afraid anymore.

And when people stop being afraid, they begin building.

A new era of hobbyists

In the 70s, there was a strange group of people tinkering with personal computers. The idea was a pipe dream, and laymen called them hobbyists. Nobody believed that personal computers would be a thing.

But hobbyists were right. They somehow sensed PCs were going to be a big deal. And since then, everybody in the VC world has been looking for new “hobbyists”, trying to spot an emerging platform when it’s still early.

Hobbyists loved personal computers because they could make machines do things. Laymen didn’t know how to make hardware and program computers to do what they needed. That’s why they didn’t care.

When the Apple II launched, everything changed. People began seeing a computer differently. In their minds, it stopped being a large box of transistors and became a useful tool that can help them work, play and grow.

I believe we’re in the same spot right now. For the past decade, a general-purpose text input-output API was a pipe dream. Some hobbyists spent years tinkering with deep learning and building special-purpose NLP. But now we’ve got GPT-3, and it’s become 10x easier for non-technical people to tinker with text apps and show what they’re making to others. And I believe the ease of experimentation will unlock way more use cases for the API than we have in our minds now.

OAAP: OpenAI as a Platform?

If OpenAI works, it will power enormous amounts of text API apps, just like Stripe powers payments for the internet.

The number of use cases for text input-output is staggering. From storytelling to code generation to ad copy – all these things will gradually become augmented by well-trained apps.

For example, we are unlikely to have static FAQs on websites just a few years from now. Instead, you will chat with a well-trained robot who replies 24/7, supports generic conversation that reflects the company’s soul, and gives you exactly what you want instead of throwing help center articles at you.

Sam Altman once said that the way to spot a new platform is to pay attention to users:

“A key differentiator is if the new platform is used a lot by a small number of people, or used a little by a lot of people.”

After I started using the API, I got hooked. I have the playground tab open on a separate computer and use it whenever I have a problem.

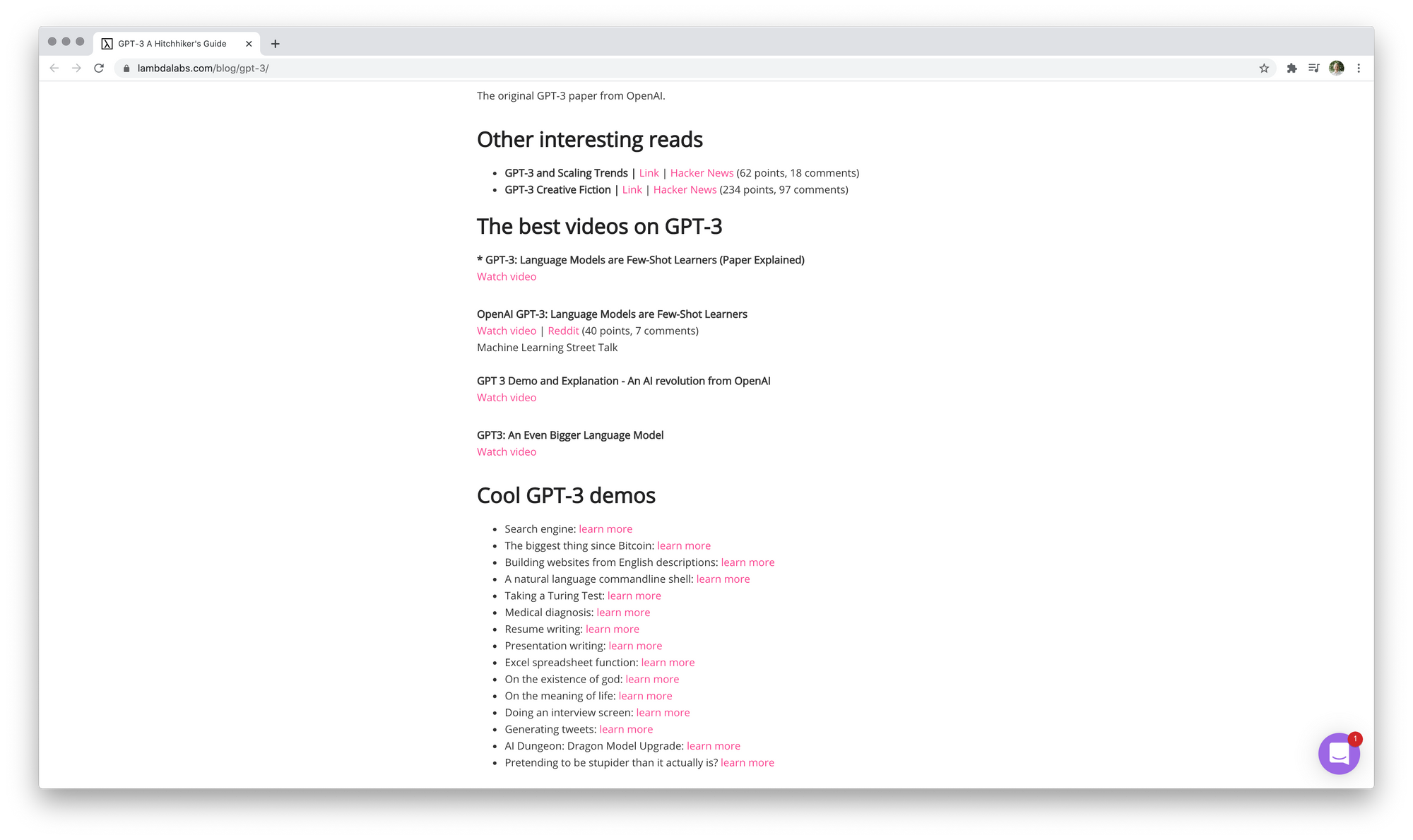

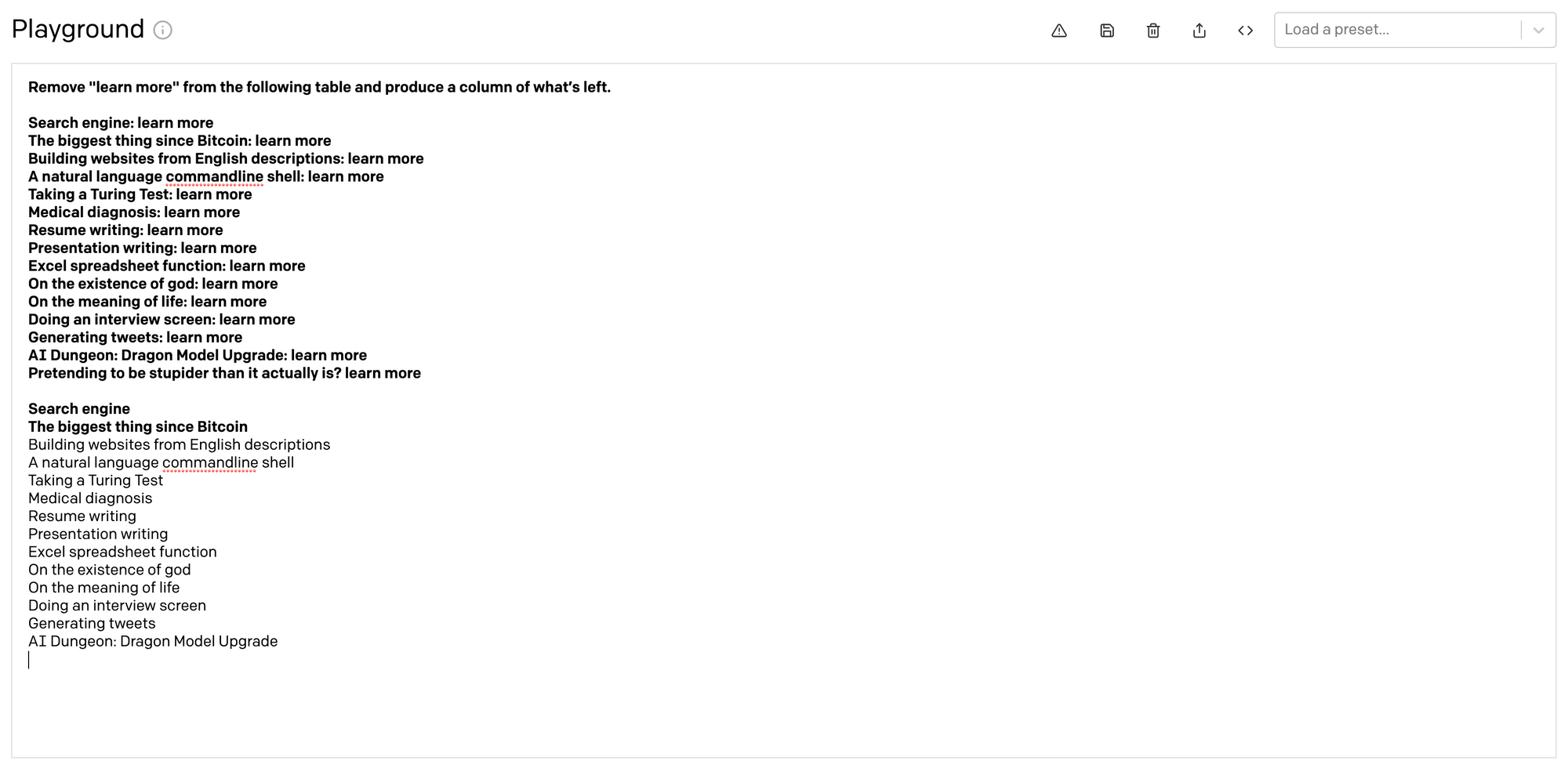

Yesterday, I was putting together a list of best materials on GPT-3 and had to remove “learn more” links from the Cool GPT-3 demos below:

I could’ve done it in Google Sheets. I could’ve done it via Terminal. But I went to the OpenAI playground because it was easier and more natural for me to do.

In ten seconds, I had my data ready:

If we teleport 50 years from now, it will seem barbaric that in 2020 we had an elite caste of hackers who knew how to write special symbols to control computing power. This sounds like a nice story to tell a friend over a beer. I hope to live long enough to see it for myself.